Late last month, the 2016 Lecture Series at the Panetta Institute for Public Policy in Carmel, CA hosted a panel discussion focusing on the topic “Changing Society, Technology and Media.”

The panelists were Ted Koppel, former anchor of ABC News' Nightline; Howard Kurtz, host of Fox News' Media Buzz; and Judy Woodruff, co-anchor and managing editor of the PBS NewsHour.

During the discussion, Ted Koppel expressed his dismay over the decline of journalism as a professional discipline, noting that the rise of social media and blogging has created an environment in which news and information are no longer "vetted" by professional news-gatherers.

One can agree or disagree with Koppel about whether the “democratization” of media represents regression rather than progress, but one thing that cannot be denied is that the rise of “mobile media” has sparked a decline in the overall number of professional media jobs.

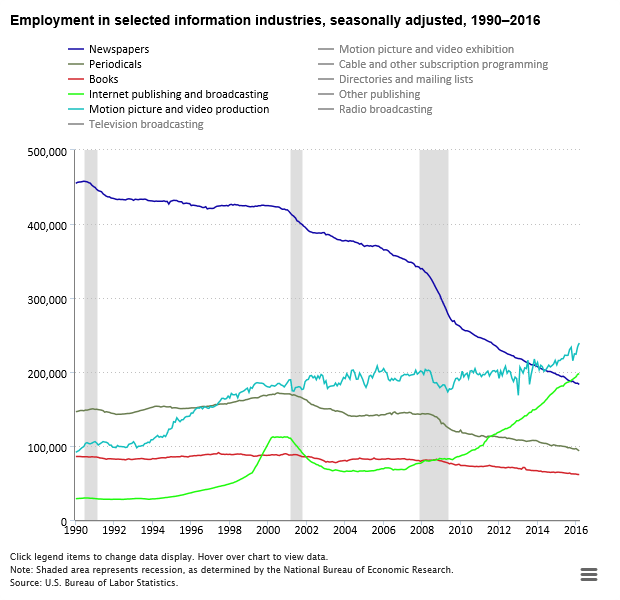

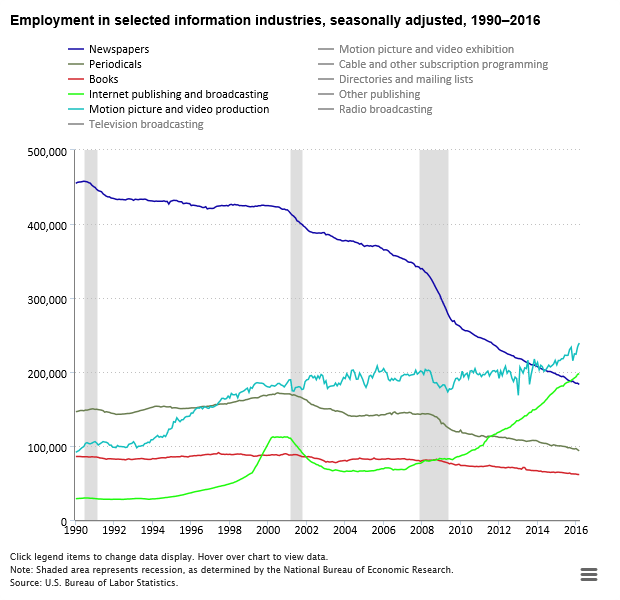

Data from the Bureau of Labor Statistics (BLS) quantify the trend pretty convincingly. As summarized in a report published in the American Consumers Newsletter, until smartphones were introduced in 2007, the Internet's effect on jobs in traditional media (newspapers, magazines and books) had been, on balance, rather slight.

To wit, between 1993 and 2007, U.S. employment changes in the following segments looked like this:

- Book Industry: Net increase of ~700 jobs

- Magazines: Net decline of ~300 jobs

- Newspapers: Net decline of ~79,000 jobs

True, the newspaper industry had been hit hard, but the other segments far less so, and radio, film and TV had actually posted net job gains.

But with the advent of the smartphone, Internet and media access became personal and portable. Look at how that has affected jobs in the same media categories, comparing 2007 with 2016 employment:

- Book Industry: Net loss of ~20,700 jobs

- Magazines: Net loss of ~48,400 jobs

- Newspapers: Net loss of ~168,200 jobs

Of course, new types of media jobs have sprung up during this period, particularly in Internet publishing and broadcasting. But those haven’t begun to make up for the losses noted in the segments above.

According to BLS statistics, Internet media employment grew by ~125,300 between 2007 and 2016, but that offsets only about half of the ~237,300 jobs lost in the book, magazine and newspaper segments alone.

All told, factoring in the impact of TV, radio and film, there has been a net loss of nearly 160,000 U.S. media jobs since 2007.
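For readers who want to check the arithmetic, here is a minimal sketch that tallies the approximate 2007-2016 figures quoted above. The variable names are hypothetical, and the last step is an assumption of ours: it treats "nearly 160,000" as exactly 160,000 to back out a rough implied TV/radio/film figure, which is an inference rather than a number from the BLS or the newsletter.

```python
# Approximate 2007-2016 U.S. employment changes quoted in the article
# (BLS figures as summarized in the American Consumers Newsletter).
segment_changes = {
    "Book Industry": -20_700,
    "Magazines": -48_400,
    "Newspapers": -168_200,
}
internet_media_gain = 125_300

# Combined change across the three traditional print segments.
traditional_change = sum(segment_changes.values())

# Net change once Internet publishing/broadcasting growth is counted.
net_after_internet = traditional_change + internet_media_gain

# Fraction of the print-segment losses offset by Internet media growth.
offset_share = internet_media_gain / -traditional_change

# ASSUMPTION: treat "nearly 160,000" as exactly -160,000 to estimate a rough
# implied TV/radio/film contribution. This is an inference, not a BLS figure.
ASSUMED_TOTAL_NET_CHANGE = -160_000
implied_tv_radio_film = ASSUMED_TOTAL_NET_CHANGE - net_after_internet

print(f"Book + magazine + newspaper change: {traditional_change:,}")
print(f"After Internet media gains: {net_after_internet:,}")
print(f"Share of print losses offset: {offset_share:.0%}")
print(f"Implied TV/radio/film change (rough): {implied_tv_radio_film:,}")
```

Run as written, it shows the three print segments shedding about 237,300 jobs, with Internet media growth offsetting roughly 53% of that. Under the stated assumption, the remaining gap implies that TV, radio and film together lost on the order of 48,000 jobs.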

You’d be hard-pressed to find any other industry in the United States that has sustained such steep net losses over the past decade or so.

Much to the chagrin of old-school journalists, newspaper readership has plummeted in recent years — and with it newspaper advertising revenues (both classified and display).

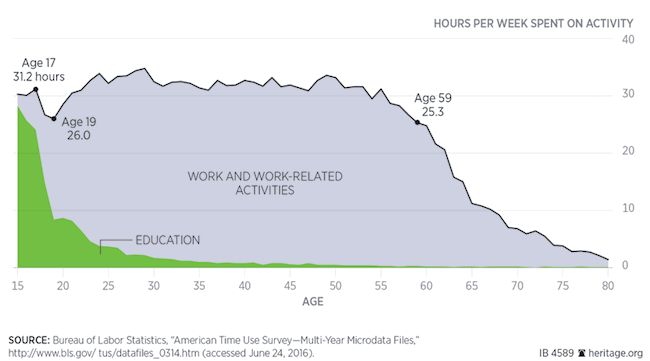

The change in behavior cuts across the board, but it is especially steep among younger readers. These usage figures tell the story:

- In 2007, ~33% of Americans age 18 to 34 read a daily newspaper … today it’s just 16%.

- Even among Americans age 45 to 64, more than 50% read a daily newspaper in 2007 … today it's around one-third.

- And among seniors age 65 and up, whereas two-thirds read a daily paper in 2007, today it’s just 50%.

With trends like that, the bigger question is how traditional media have managed to hang in there as long as they have. If dollars and cents were the only consideration, the job losses would have been even steeper.

Perhaps we should take people like Jeff Bezos — who purchased the Washington Post newspaper not so long ago — at their word: Maybe they do wish to see traditional journalism maintain its relevance even as the world around it is changing rapidly.