Can the World Economic Forum be any less useful or relevant?

Most people in business and politics have heard of the World Economic Forum (WEF), best known for holding its annual meeting for the world’s glitterati every January in Davos, Switzerland.

Beyond that international confab, WEF provides a set of “Transformation Maps” on its website, which it describes as “a constantly refreshed repository of knowledge about global issues, from climate change to the future of work.”

“Transformation Maps are the World Economic Forum’s dynamic knowledge tool,” the website declares. “They help users to explore and make sense of the complex and interlinked forces that are transforming economies, industries and global issues.”

The maps present insights written by so-called experts along with machine-curated content. Together, “the information allows users to visualise and understand more than 250 topics and the connections and inter-dependencies between them, helping in turn to support more informed decision-making by leaders.”

… And it’s all provided as free content!

Take a topic of current interest as an example. To find out about Ukraine, a reader can click on a link beneath a Transformation Map labelled “Fourth Industrial Revolution,” which brings up a new page: https://intelligence.weforum.org/topics/a1Gb0000001RIhBEAW?tab=publications. From there, selecting “Discover All Topics” from the top menu brings up another page: https://intelligence.weforum.org/topics.

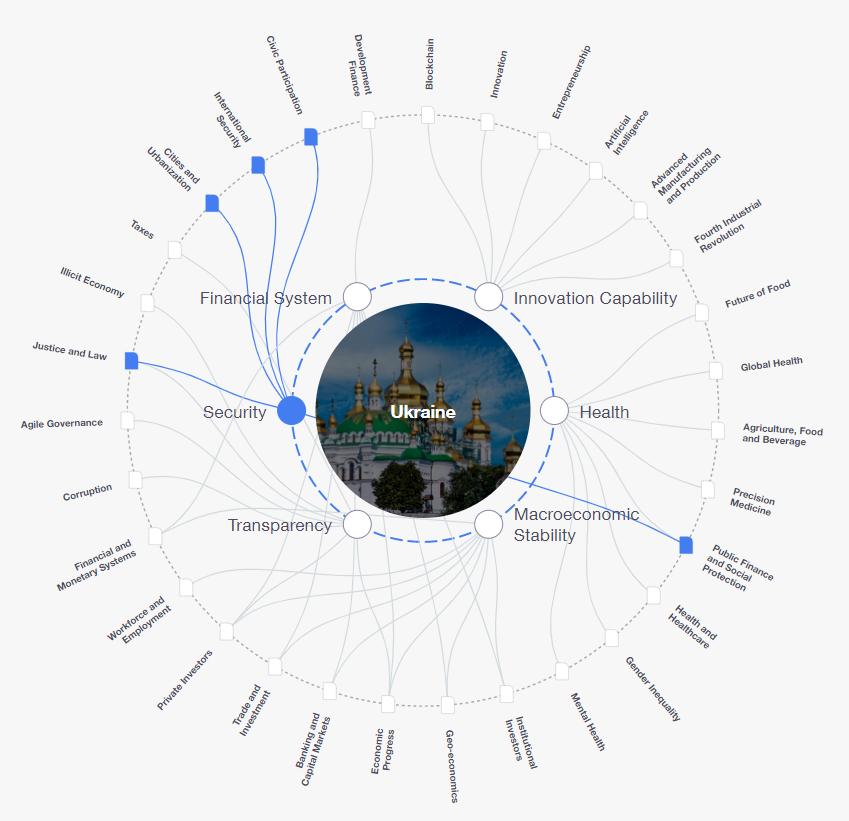

That page displays 293 different topics. Scrolling down a good ways finally brings up “Ukraine” … and here’s what is displayed after clicking on the “Security” issue:

Explaining how the chart was developed, the website reports:

“This Transformation Map explores key issues for Ukraine based on its rankings in the most recent edition of the World Economic Forum’s Global Competitiveness Index.”

For the record, those six “key issues” are Financial System, Innovation Capability, Health, Macroeconomic Stability, Transparency and Security.

The Security issue then links to five other topics (Public Finance and Social Protection, Justice and Law, Cities and Urbanization, International Security and Civic Participation).

Curious to see what content would be displayed, I clicked the International Security topic, which brought up the International Security Transformation Map. Only then did I finally learn that:

“The return of great power competition has been accompanied by the outbreak in Ukraine of Europe’s largest ground war since World War II.”

I must admit that I am quite impressed with how these Transformation Maps have helped me to visualize and understand the connections and inter-dependencies between Ukraine and International Security … and it is now completely clear to me how these tools support more informed decision-making by leaders. [Feel free to insert snark emoji here.]